AI in the State of Nature

Why global problems like AI require global governance

“The natural state of men, before they entered into society, was a mere war, a war of all men against all men.” - Thomas Hobbes, De Cive

“In those days Israel had no king; everyone did as they saw fit.” - Judges 21:25, NIV

The scale and complexity of our modern world have led to many global challenges that will be difficult to solve. AI, climate change and nuclear proliferation all fall into this category. They are hard because they require coordination, whereas in the current geopolitical system every state pursues its own self-interest, to our shared detriment. There are notable exceptions where countries act out of an altruistic expression of their values. These mostly take the form of rich Western countries offering asylum and distributing aid out of a sense of universal humanitarianism.

This idealism is waning, and the West is taking a more transactional approach. As the liberal, rules-based order recedes, the West loses its claim to the moral high ground, and we return to a period of realism in which everything is about power and strength, not values.

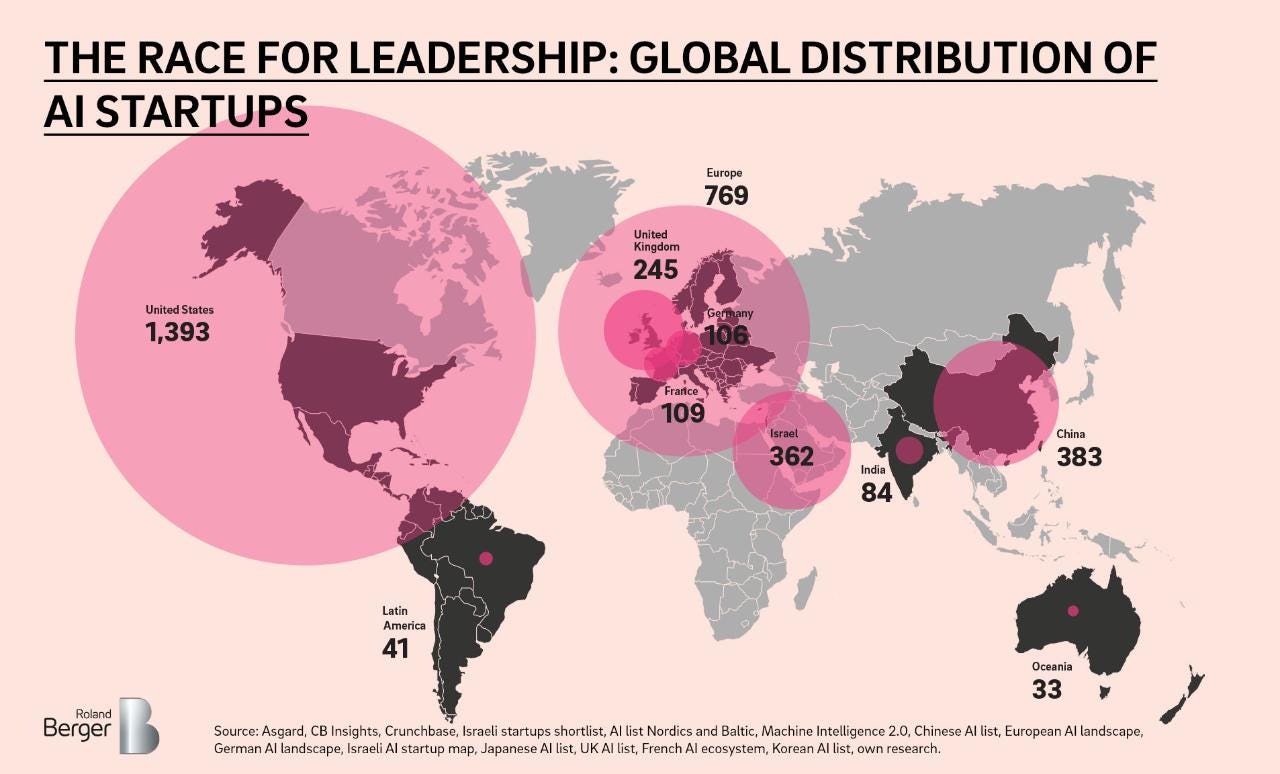

The development of AI is the latest of these coordination problems. Like nuclear proliferation before it, AI is an arms race. Nations have two incentives to build AI. Firstly, AI increases military power: the war in Ukraine has shown that the battlefield of the future will be fought with advanced information technology. Secondly, there is an economic incentive. Advances in AI increase productivity, which raises standards of living. Every government optimizes for this or risks civil unrest; even the CCP’s “mandate of heaven” rests on its record of raising living standards year over year. This arms race can only be broken with global regulation.

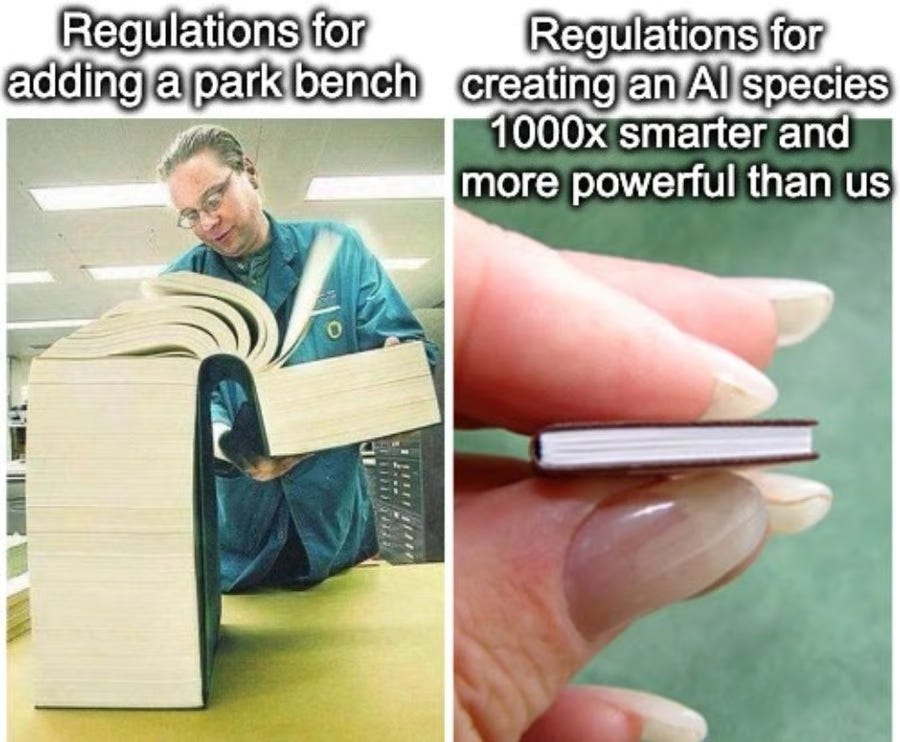

Although expert opinion on the current AI paradigm of generative models is mixed, concern over the arms race dynamic is widely shared. I am skeptical of GAI, but we could be only a few breakthroughs away from something truly disruptive. The current approach to regulation is insufficient to protect us: it allows AI to progress unchecked until it reaches a dangerous level of capability. We must therefore act urgently and rigorously to create robust global governance structures that will last, regardless of our opinions on GAI. If we get lucky, GAI will level off and we will have enough time to do so.

US companies dominate AI development with little regulation; governance vs alignment

At the moment, the frontier AI companies are concentrated in San Francisco. Within the United States, companies are locked in a deadly race because there is minimal regulation in America. A bill to regulate frontier AI in California, the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act (SB 1047), passed the state legislature but was vetoed by Governor Newsom after heavy lobbying from AI companies[1]. The Biden administration also took federal antitrust action against tech companies, for example through stricter scrutiny of mergers and acquisitions. Under Trump, things have flipped: his administration is staffed with big tech figures, and the timeline for federal AI governance has been pushed back until the Democrats win the midterms, or until 2028. This is a problem. We need to set up governance structures now, before AI becomes too powerful, which could happen at any time. Because of lobbying and a laissez-faire attitude towards business, the United States cannot be relied upon to regulate AI.

To give them credit, AI companies put real emphasis on aligning models to human values. For example, Anthropic uses a technique called “Constitutional AI”[2] to train its models to have a certain character; people generally find its model Claude charming and useful. Companies also take cyber threats seriously and do a lot of work to keep model weights secure. Lastly, they invest heavily in trust and safety to prevent harmful use of the models through their APIs. Approaching safety from each of these angles is a defense-in-depth approach, and in practice it works reasonably well.

However, unlike governance, none of these measures acts as a serious handbrake on capabilities research. Companies are happy to do alignment, as it makes their products more appealing and less likely to cause them legal problems, but they bristle at governance, as it implies regulation.

The problem with focusing solely on alignment is twofold. Firstly, it is brittle: we build techniques to align a given architecture, and it is unclear how well they transfer to future architectures. Governance, in contrast, is broad and can apply to many different AI architectures as long as they are functionally similar.

Secondly, alignment can also increase capabilities. The best example of this is reinforcement learning from human feedback (RLHF), the technique that turns base models like GPT-3 into chat models like ChatGPT. Base models are erratic and often offensive; chat models are tuned to answer your questions correctly and behave well.

RLHF was developed by leading AI safety researchers, including Paul Christiano and Dario Amodei, as a method to align AI systems with human preferences[3]. They sought to build AI that was more useful and better aligned to users’ values, and in doing so created a new paradigm that led to powerful, minimally aligned, open-source AI like DeepSeek, while also spurring further investment and exacerbating the arms race. In contrast, progress in responsible governance necessarily implies an increase in control.

Antitrust action would block the AI monopolies of tomorrow but is unlikely

We also need regulation and governance for economic reasons; we already know the downsides of unregulated capitalism. The historical analogy that the writer Cory Doctorow uses for big tech companies is the “robber barons”[4], the wealthy industrialists of the late 19th and early 20th centuries (such as John D. Rockefeller, owner of Standard Oil). They followed a playbook for economic dominance that involved the abuse of natural resources, corruption of government, wage slavery, quashing competition through acquisitions, and the creation of monopolies to exert market control.

Is this not exactly how today’s large AI companies behave when they train on copyrighted material, build massive data centers that pump carbon into the atmosphere, lobby to avoid antitrust enforcement, pay starvation wages to human annotators, acquire competitors, and seek market control through regulatory capture?

The solution that eventually curbed the power of the robber barons was, of course, straightforward antitrust law. Under the Sherman Act, Standard Oil was deemed a monopoly and broken up in 1911. The Clayton Act restricted price discrimination and anticompetitive mergers, and the Federal Trade Commission was created to protect competition. We could use the same playbook today, but it does not look likely given the amount of regulatory capture in America.

The fact that America can’t regulate its companies wouldn’t matter so much if there were global governance of AI that applied to American companies. However, there is no “global government” layer above the level of nation states; America can do what it wants because it is powerful. The League of Nations and the UN were attempts at global coordination after World War I and World War II respectively. Unfortunately, the United Nations is not a legislative body; it exists mainly to foster cooperation between governments and has little power to compel them to do anything.

Global AI governance is the only solution

We can sketch out in broad strokes what a global governance solution would look like. Firstly, note that the minimal “state” is an entity that holds a legitimate monopoly on violence in a geographical area. Good states are layered in their levels of administration and enforcement, and problems are solved at the level at which they occur. For example, in the US the FBI, a federal agency, investigates crimes that cross state lines, while state and local law enforcement deal with crime within states.

So our global governance structure should differ from a “unitary world government” in its minimalist federalism. It should exist only to solve problems of global coordination and should not intervene in any other matter. This is not a statement of cultural relativism, just a pragmatic acknowledgment of how difficult such a structure would be to establish. A minimalist, sovereign world government sounds far-fetched, but the idea was seriously considered after World War II, when it was known as “world federalism”. Einstein was a leading advocate for it after the development of nuclear weapons, alongside many other prominent thinkers.

If we could revive this idea and establish a federal union of nation states, coordination on AI would become possible. A global social contract could be formed to avoid outcomes catastrophic to all. International legislation could be passed on the safe and ethical development of AI, covering information sharing, compliance testing, and rules for the development and deployment of AI systems. Although it is unlikely, this is, in my current estimation, our best bet to break the arms race dynamic and ensure we can cooperate to develop AI safely.

[2] Anthropic, Constitutional AI: https://www-cdn.anthropic.com/7512771452629584566b6303311496c262da1006/Anthropic_ConstitutionalAI_v2.pdf

[3] Deep reinforcement learning from human preferences: https://arxiv.org/abs/1706.03741; see also https://openai.com/index/learning-from-human-preferences/ and https://openai.com/index/instruction-following/

[4] Cory Doctorow, “Amazon is the apex predator of our platform era”: https://www.sltrib.com/opinion/commentary/2023/10/01/cory-doctorow-amazon-is-apex/