On Not Predicting the Future

Note: This is a version of a manuscript I'm working on which will eventually turn into my thesis proposal. I published another version of it (less technical) on my substack blog, where I post stuff for a general audience. This blog is for more personal / professional stuff.

The future of AI development is uncertain

The current AI situation is very confusing for policymakers. We are currently in the middle of a new paradigm; the transformer architecture has allowed us efficiently scale up models in different ways. Following these scaling curves has driven rapid progress on many benchmarks, as well as resulted in massive products like chatGPT. However, the current paradigm has flaws and expert opinion is divided on how far it will take us. If we want to make good policy about AI, we need to accept there is a fundamental uncertainty to how AI will develop, and prepare ourselves for many possible outcomes.

Among experts, there is no consensus on how fast capabilities will advance. There is evidence that short, medium, or long timelines for AI development are feasible, depending on how bullish one is on the current paradigm.

We cannot predict when fundamental breakthroughs in AI capabilities will occur. There is also a diffusion lag that means take time to come to market. This complicates the situation; what is currently the practical limits of AI may not reflect the theoretical limits of what we have discovered.

The hyper competitive market around AI may mean this is changing, at least in the case of generative AI (there is still a lag in safety constrained areas like robotics or medical devices). Now that the infrastructure is mature and we have huge competitive pressure, new advances such as reasoning models are quickly deployed. Even premature ideas like agents are being deployed and iterated on in public.

This makes it difficult to articulate robust policy interventions that will age well. Many policies are trade offs; a policy that is helpful in a certain timeline of AI development could be harmful in another timeline.

Therefore we explore in this thesis several concepts. One is scenario planning - a simple but mature technique of risk managment used by governments and national security agencies to identify key uncertainties in unpredictable situations, and build realistic scenarios based on combinations of these uncertainties. Next, we imagine policy responses to these scenarios, putting a premium on responses that age well in any case (so called low regret policies).

The means of current and future progress

Recent progress has been driven by scaling up different aspects of AI models. Scaling doesn't just mean making models bigger. There are several different scaling “laws”. You can scale data quality, data size, model size, and recently, thinking time (inference time).

Every year, AI developers scale up training compute by ~4x and dataset size by ~2.5x. The primary driver of training compute growth has been investments to expand the AI chip stock. This is because demand has outpaced improvements in chip performance - AI companies are hoarding chips moreso than semiconductor fabricators are improving them. According to the AISR, we should be able to scale up compute until 2030 (that would be 10,000x current levels), but after that we may run into problems due to bottlenecks in data, chip production, capital, and energy.

Performance increases logarithmically with both training resources and thinking time.

This is a concern for AI companies who worry about diminishing returns on scaling (which we may be starting to see with the relative "letdown" of models like GPT-5), but the idea is they can keep finding new things to scale, or scale existing things more intelligently.

A very important aspect which has many technological diffusion implications is 'training efficiency'. We are seeing roughly 3x improvement in efficiency per year.

There is also a lot of innovation around post training. A review of post training methods found that they can lead to large performance gains for the model while requiring very little compute.

Synthetic data allows us to get arbitrarily large datasets, but is mostly useful for domains where the synthetic data can be formally verified and filtered for quality, such as mathematics and programming.

Reasoning models take advantage of this; Reinforcement learning is performed on the model's chain of thought, which induces the model to independently learn to solve problems logically through step by step reasoning.

This can lead to very impressive results; an advanced version of Google’s Gemini model was able to achieve a gold medal in the international mathematical olympiad, a math competition for the world’s smartest high school students. Importantly, it did all in natural language, with no computer algebra system. This is genuinely impressive.

One downside of this approach is that pushing this aggressive reinforcement learning on verifiable rewards seems to degrade the model in other ways, such as increasing its hallucination rates.

The next wave of focus for companies is agents. These are autonomous systems, built on the idea of training current language models to iteratively use tools and receive environmental feedback as context in a loop. Agents are still too unreliable to really be usable, however there is a lot of investment in them as they are expected to be very economically valuable.

It is hard to evaluate effectiveness

A large part of the divide in expert opinion is possibly due to what we can call the measurement gap.

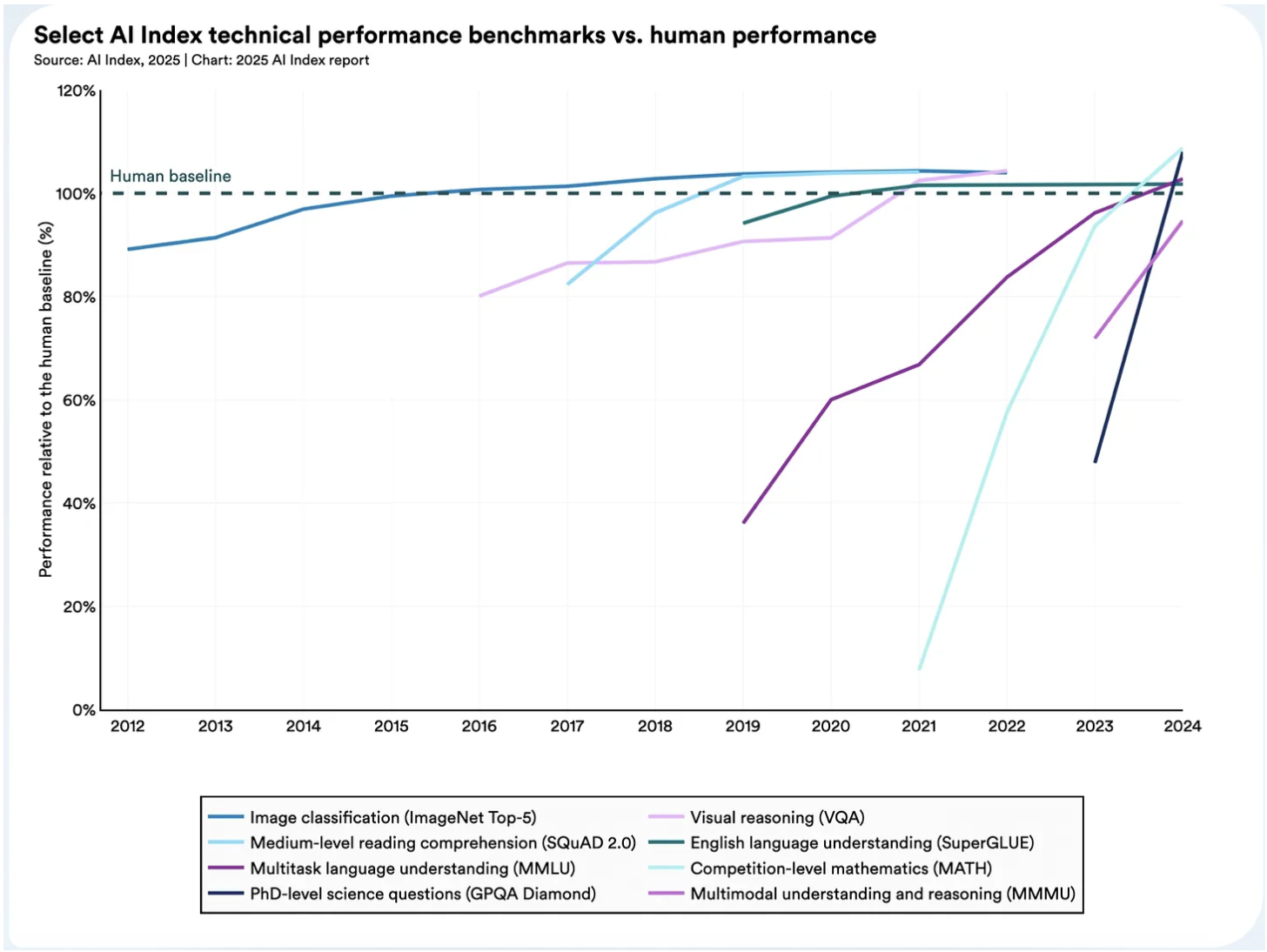

Recent progress has outpaced expectations, and many widely used benchmarks have been maxed out. However, models can still be very disappointing and fail in inhuman ways. AI benchmarks are useful for measuring algorithmic progress; but this is not the same thing as progress in usefulness. There is also a problem of anthropomorphism - AI is a different type of “mind” - it is both better and worse than humans in different ways, and mapping our internal conceptions of competence onto it can get us in trouble. So it is difficult to evaluate both from a quantitative and a qualitative point of view.

Benchmarks like SWE bench show that models can sometimes complete discrete, defined tasks, but that is only one part of performing a professional job. Models are not yet capable of the parts of a job that are less well defined and require creativity, judgement, context, etc. These “glue” aspects of a job that exist between the crisp boundaries of well defined, exam like tasks are precisely the things that would be most economically transformative if they were automated. However, benchmarks struggle to capture them; how do you give someone a “numerical score” on how good they are at their job? This measurement gap leads to a policy challenge: it's hard to tell 'how good' AI really is at stuff, because there are many important aspects of capability that aren't amenable to measurement. Overall, the current picture of AI is very murky, and the strategic landscape policy makers find themselves in seem wide open, precluding almost any possible outcome, including rapid progress or disappointment. This is especially the case in New Zealand, where we are somewhat isolated from the main centers of AI development (San Fransisco, Seattle, London,and Chenzen all being relatively far away.)

Scenario planning for AI

We’ve just recently been caught flat footed by another, much less transformative technology; social media. We failed to regulate the harms because we had the best case scenario in mind only. Instead of planning for different outcomes of the technology, we drifted into a disaster.

We need to be proactive with AI to avoid this happening. Instead of being ideologically tied to a certain scenario (i.e a pet theory) we should have multiple scenarios in mind, and then be mentally flexible and adjust our approach based on what happens.

The way governments traditionally do this in high stakes areas such as pandemic preparedness is through a mature risk management technique called scenario planning.

To do scenario planning, you start with a situation with several things you can't predict. For us, this is AI's future development and impact. There are many things we cannot predict about this, but in scenario planning, you drill down on what they call "key uncertainties" (KUs). Then, you build up different scenarios based on combinations of those KUs. It makes sense to focus on the most impactful potential outcomes (i.e tail risks), as there is less need to plan for a benign scenario where nothing bad happens. This means the scenarios often look overly negative, its important to note that this is not the "median" outcome expected of AI and that it will bring many transformative benefits as well as risks.

Then you build up different scenarios based on interesting combinations. There is a lot of expert feedback during this process to make sure the combinations are likely, interesting, and make sense.

Here's a practical example; the UK government just released the AI 2030 Scenarios Report. They identified five key uncertainties with regards to AI; capability, ownership concentration, safety, market penetration, and international cooperation.

They then imagined many different scenarios based on the values these variables could take. They then got rid of combinations that didn't make sense, and grouped the rest together into 5 key scenarios. They built these scenarios up to be compelling and detailed, and thought about their implications and possible policy responses.

The five scenarios produced by the UK AI2030 report explore likely combinations these uncertainties could take. It is key to note that what policy response would work in one scenario would be inappropriate or even harmful in another scenario, necessitating the ability to monitor to determine which scenario we find ourselves in, hence my post on the AI safety institute as a "regret free" policy (more on those in a future post).

This planning is already being undertaken around the world; by governments like the UK and the US, and by non-profits and researchers. Crucially, it is not enough for us to stand by and just take the recommendations of this or that plan. In New Zealand, we need to do our own planning. Primarily, this is because we are in a unique position as a country, and other plans will not work for us. But we also have a moral duty; as a developed country, we must contribute to illuminating the path forward. Instead of trying to predict the future, we need to build strong monitoring systems and develop robust plans that allow us to thrive wherever AI takes us.